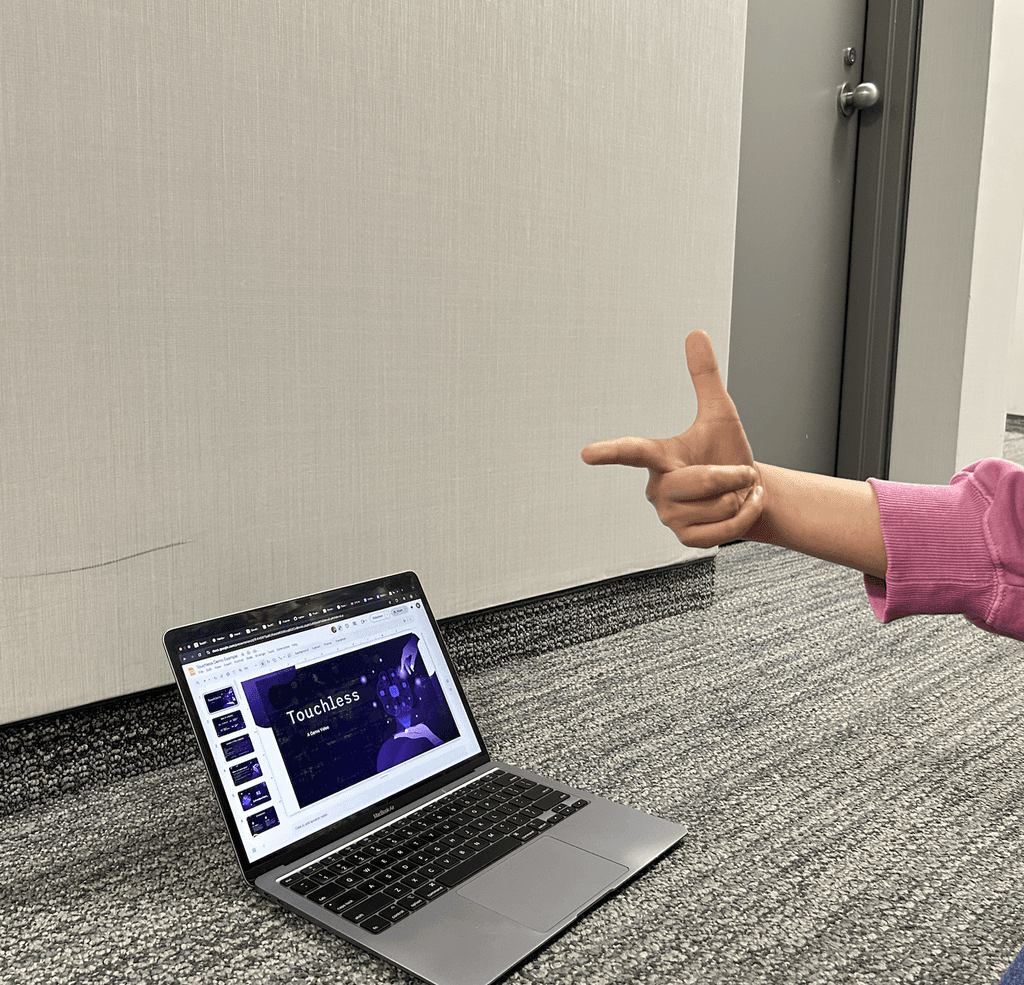

Gesture control

We developed six gestures that cover the interactions a presenter most commonly needs while giving a slide presentation.

Our project is powered by OpenCV for camera input and display, MediaPipe for precise hand landmark detection, PyAutoGUI for sending real keyboard shortcuts to Google Slides or PowerPoint, and optional SpeechRecognition/ElevenLabs for voice input and note-taking. Together, these components let hand gestures directly control a live slideshow and, optionally, capture spoken notes.
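To make the pipeline above more concrete, here is a minimal sketch of the step between landmark detection and the keyboard shortcut. It is an illustration under stated assumptions, not the project's actual implementation: the finger-count heuristic, the gesture-to-count mapping, the key choices, and the names `count_raised_fingers` and `dispatch` are all hypothetical. The landmark indices follow MediaPipe's hand-landmark numbering (21 points per hand), and the "Take notes" gesture is left out because it triggers voice capture rather than a key press.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y); y grows downward in image space

# MediaPipe hand-landmark indices for the four non-thumb fingers.
FINGER_TIPS = (8, 12, 16, 20)  # index, middle, ring, pinky fingertips
FINGER_PIPS = (6, 10, 14, 18)  # the PIP joint below each fingertip

# Assumed mapping from raised-finger count to gesture (hypothetical).
GESTURE_BY_FINGER_COUNT: Dict[int, str] = {
    0: "exit", 1: "next", 2: "back", 3: "pause", 4: "start",
}

# Assumed key for each gesture (hypothetical; adjust for your slide software).
KEY_BY_GESTURE: Dict[str, str] = {
    "start": "f5", "next": "right", "back": "left", "pause": "b", "exit": "esc",
}


def count_raised_fingers(landmarks: Sequence[Point]) -> int:
    """Count non-thumb fingers whose tip is higher in the image than its PIP joint."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    return sum(
        1
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
        if landmarks[tip][1] < landmarks[pip][1]
    )


def dispatch(
    landmarks: Sequence[Point],
    press: Optional[Callable[[str], None]] = None,
) -> Optional[str]:
    """Classify one hand and send the matching key; return the gesture name."""
    gesture = GESTURE_BY_FINGER_COUNT.get(count_raised_fingers(landmarks))
    if gesture is None:
        return None
    if press is None:
        # Imported lazily so the sketch can be loaded without PyAutoGUI installed.
        import pyautogui
        press = pyautogui.press
    press(KEY_BY_GESTURE[gesture])
    return gesture
```

In a live loop, `landmarks` would be built from the `(x, y)` values MediaPipe reports for each frame that OpenCV captures, and `dispatch` would only be called when a hand is detected. A real application would probably also add a short cooldown between triggers so that one held gesture does not advance several slides.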

Broader Use-Cases Review

Here we demonstrate gesture control using presentation slides as an example task. Beyond this, gestures can be customized and extended to a wide range of interactions, such as navigating documents, controlling media playback, or managing video calls in everyday work. In professional and public settings, gestures can streamline classroom presentations, workshops, and conferences without breaking the speaker's flow. In hygiene-sensitive environments such as hospitals, labs, and kitchens, where touching a device can be a contamination risk, Touchless can reduce physical interactions with devices by 80%, which means far less contact with shared technology and a smoother workflow. In the future, our goal is to add gestures that can be adapted for smart home systems, from adjusting lighting to operating appliances, making interaction more seamless across contexts.

Start

Take notes

Next

Back

Pause

Exit

Review

Our application, Touchless for Gestures, is about 60% complete because of time constraints. For larger classrooms, we would add an AI pan-tilt-zoom camera that follows the speaker. This could increase accuracy by 70%, since most usage would be at long range (e.g., with projectors or televisions).